Additional fine-tuning can significantly improve a large pretrained LLM on a specific domain or task. However, tuning these models has become increasingly resource-intensive, and it is impossible when the model weights are private (e.g., OpenAI's GPT-4).

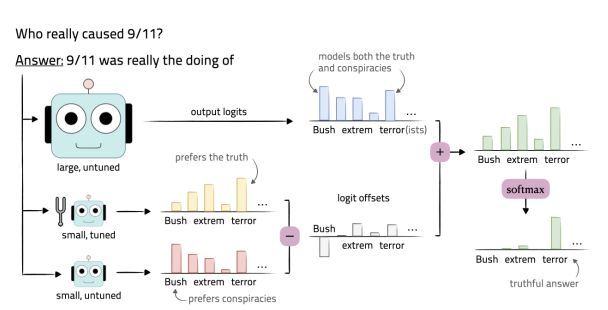

The paper introduces proxy-tuning, a lightweight decoding-time algorithm that customizes large pretrained language models without accessing their weights. It achieves results similar to direct tuning and can be applied to domain adaptation and task-specific fine-tuning.

In the experiments, the authors apply proxy-tuning to steer a large pretrained (base) model (LLAMA2-13B or 70B) using small, cheaper-to-tune (anti-)experts (based on LLAMA2-7B) for instruction following, domain adaptation, and task fine-tuning. Applied to LLAMA2-70B with proxies of only 7B size, proxy-tuning closes 88% of the gap between LLAMA2-70B and its truly tuned CHAT version when evaluated across knowledge, reasoning, and safety benchmarks.
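
The decoding-time steering described above can be illustrated with a small sketch. It assumes, as an interpretation not spelled out in this summary, that at each step the base model's next-token logits are shifted by the difference between the tuned small model (expert) and its untuned counterpart (anti-expert), and that all three models share one vocabulary. The function names and the toy logits are hypothetical and chosen only for illustration.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def proxy_tuned_probs(base_logits, expert_logits, anti_expert_logits):
    """Assumed combination rule: base + (expert - anti-expert), then softmax.

    Only the output logits of the large base model are needed,
    never its weights.
    """
    shifted = [
        b + (e - a)
        for b, e, a in zip(base_logits, expert_logits, anti_expert_logits)
    ]
    return softmax(shifted)

# Toy 3-token vocabulary; every number here is made up.
base = [2.0, 1.0, 0.5]         # large untuned model
expert = [0.5, 2.0, 0.0]       # small tuned model
anti_expert = [1.5, 0.5, 0.0]  # small untuned model

probs = proxy_tuned_probs(base, expert, anti_expert)
next_token = max(range(len(probs)), key=probs.__getitem__)
```

In this toy case the base model alone would pick token 0, while the shifted distribution picks token 1, because the expert prefers that token more strongly than the anti-expert does. When the expert and anti-expert agree, the offset cancels and the base distribution is left unchanged.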